Hello world with Flume and HDFS

In this blog we will install and set up Flume and then ingest data into it. We first do an exercise without HDFS and then one with HDFS.

Prerequisites

- A working HDFS installation

- Basic knowledge of Unix

Versions Used for this exercise

- apache-flume-1.6.0-bin.tar.gz

- java version “1.7.0_75”

- Hadoop 2.6.3

Download, Install and Set Up

- https://flume.apache.org/download.html

- tar -xzvf apache-flume-1.6.0-bin.tar.gz

- ln -s apache-flume-1.6.0-bin flume
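The tar flags matter here: -x extracts the archive, while -t only lists what is inside it. As a hedged illustration, the two shell steps (extract, then add a version-free flume symlink) can be sketched with Python's standard tarfile and os modules. The function names are hypothetical, and the sketch assumes the archive has a single top-level directory, as the Flume binary tarball does.

```python
import os
import tarfile


def list_archive(archive):
    """Like `tar -tzf`: return the member names without extracting anything."""
    with tarfile.open(archive, "r:gz") as tar:
        return tar.getnames()


def install_flume(archive, dest_dir, link_name="flume"):
    """Like `tar -xzf` followed by `ln -s`: extract, then link a stable name to the versioned directory."""
    with tarfile.open(archive, "r:gz") as tar:
        # Assumes every member sits under one top-level directory, e.g. apache-flume-1.6.0-bin/
        top = tar.getnames()[0].split("/")[0]
        tar.extractall(dest_dir)
    link = os.path.join(dest_dir, link_name)
    if not os.path.lexists(link):
        # Relative target, exactly like running `ln -s apache-flume-1.6.0-bin flume` inside dest_dir
        os.symlink(top, link)
    return link
```

Calling install_flume("apache-flume-1.6.0-bin.tar.gz", os.path.expanduser("~")) would leave ~/flume pointing at the extracted directory, so later paths such as ~/flume/conf keep working after an upgrade.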

Set up flume-env.sh

http://www.tutorialspoint.com/apache_flume/apache_flume_environment.htm

- $ cd ~/flume/conf

- $ cp flume-env.sh.template flume-env.sh

- Set JAVA_HOME in flume-env.sh. You have to know where Java is installed; in this example it is /home/hadoop/jdk.

- $ vi ~/flume/conf/flume-env.sh

- export JAVA_HOME=/home/hadoop/jdk
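If you are not sure where Java lives, one common heuristic is to resolve the java executable on your PATH through its symlinks and strip the trailing bin/java. The sketch below is an assumption-laden helper, not part of Flume: the function names are hypothetical, and the jre handling assumes a JDK 7/8 layout where the resolved binary may sit under <jdk>/jre/bin/java.

```python
import os
import shutil


def java_home_from_binary(java_path):
    """Strip the trailing bin/java (and a jre/ directory, if present) from a java executable path."""
    bin_dir = os.path.dirname(java_path)   # .../bin
    home = os.path.dirname(bin_dir)        # parent of bin
    if os.path.basename(home) == "jre":    # JDK 7/8: <jdk>/jre/bin/java
        home = os.path.dirname(home)
    return home


def guess_java_home():
    """Heuristic: find `java` on PATH, follow symlinks, then strip bin/java. Returns None if java is absent."""
    java = shutil.which("java")
    if java is None:
        return None
    return java_home_from_binary(os.path.realpath(java))


if __name__ == "__main__":
    print("JAVA_HOME guess:", guess_java_home())
```

Treat the printed value as a starting point and confirm the directory contains bin/java before putting it in flume-env.sh.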

Set up flume-conf.properties

- $ cp flume-conf.properties.template flume-conf.properties

- We will copy the settings from the user guide and make minimal changes

- https://flume.apache.org/FlumeUserGuide.html

$ vi flume-conf.properties

    a1.sources = r1
    a1.sinks = k1
    a1.channels = c1

    # Describe/configure the source
    a1.sources.r1.type = netcat
    a1.sources.r1.bind = localhost
    a1.sources.r1.port = 44444

    # Describe the sink
    a1.sinks.k1.type = logger

    # Use a channel which buffers events in memory
    a1.channels.c1.type = memory
    a1.channels.c1.capacity = 1000
    a1.channels.c1.transactionCapacity = 100

    # Bind the source and sink to the channel
    a1.sources.r1.channels = c1
    a1.sinks.k1.channel = c1

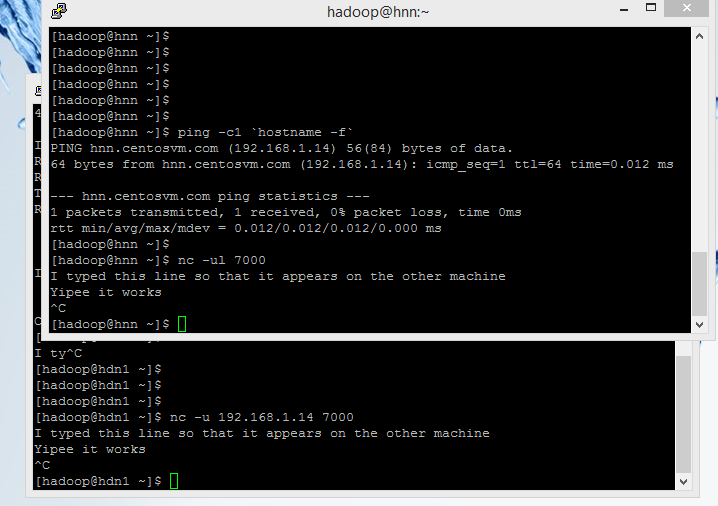
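In this file a source lists its channels with the plural key channels (a source can feed several channels), while a sink names exactly one channel with the singular key channel. The a1 prefix is the agent name, which must match the --name passed to flume-ng. A minimal sketch of a wiring check is shown below; parse_properties and check_wiring are hypothetical helpers, and the parser only handles simple key = value lines, not the full Java properties syntax.

```python
def parse_properties(text):
    """Parse simple `key = value` lines, skipping blank lines and # or ! comments.
    Not a full java.util.Properties parser (no line continuations or escapes)."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def check_wiring(props, agent="a1"):
    """List problems with how an agent's sources and sinks reference its channels."""
    problems = []
    channels = set(props.get(f"{agent}.channels", "").split())
    if not channels:
        problems.append(f"agent {agent} declares no channels")
    # Sources use the plural key `channels`.
    for source in props.get(f"{agent}.sources", "").split():
        used = props.get(f"{agent}.sources.{source}.channels", "").split()
        if not used:
            problems.append(f"source {source} is not bound to a channel")
        for ch in used:
            if ch not in channels:
                problems.append(f"source {source} uses undeclared channel {ch}")
    # Sinks use the singular key `channel`.
    for sink in props.get(f"{agent}.sinks", "").split():
        ch = props.get(f"{agent}.sinks.{sink}.channel")
        if ch is None:
            problems.append(f"sink {sink} is not bound to a channel")
        elif ch not in channels:
            problems.append(f"sink {sink} uses undeclared channel {ch}")
    return problems
```

For example, check_wiring(parse_properties(open("flume-conf.properties").read())) should return an empty list for the file above; a typo such as a1.sinks.k1.channel = c2 would be reported as an undeclared channel.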

Before running Flume, test netcat. What is netcat?

https://en.wikipedia.org/wiki/Netcat

- Netcat is a utility that reads and writes data over network connections using TCP or UDP ports. See Wikipedia for full details.

- If netcat is not installed, use “sudo yum install nc.x86_64” to install it.

- Test netcat to understand how it works: run the listener in one terminal and the sender in another (see below), or follow the instructions in Wikipedia.

Netcat Listener (start this first)

nc -ul 7000

Netcat Sender (run in a second terminal; each line you type should appear in the listener)

nc -u localhost 7000
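The -u flag makes netcat use UDP, so the listener simply binds a port and prints whatever datagrams arrive. (Flume's netcat source, used later, listens on TCP instead.) As a rough sketch of what the two commands do, here is the same exchange with Python's standard socket module; open_udp_listener and udp_send are hypothetical names, and 7000 is just the port from the example.

```python
import socket


def open_udp_listener(port=7000, host="127.0.0.1", timeout=5.0):
    """Roughly what `nc -ul 7000` does: bind a UDP socket and wait for datagrams."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.settimeout(timeout)  # avoid blocking forever if nothing is sent
    sock.bind((host, port))
    return sock


def udp_send(message, port=7000, host="127.0.0.1"):
    """Roughly what typing one line into `nc -u localhost 7000` does."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message.encode("utf-8"), (host, port))
```

Usage mirrors the two terminals: open the listener, call udp_send("hello netcat\n"), then listener.recvfrom(4096) returns the bytes that were sent. Because UDP is connectionless, the sender gets no acknowledgement, which is one visible difference from the TCP-based Flume source.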

Start Flume

$ ~/flume/bin/flume-ng agent --conf ~/flume/conf --conf-file ~/flume/conf/flume-conf.properties --name a1 -Dflume.root.logger=INFO,console

Install telnet if your machine does not have telnet

- $ sudo yum install telnet

- [sudo] password for hadoop:

- :::::::

- Installed:

- x86_64 1:0.17-48.el6

- $

Feed input to the netcat source

- To test Flume, we send messages to its netcat source on port 44444 (here with telnet); the logger sink should write each event to the log through Log4j.

- $ telnet 168.1.14 44444

- -bash: telnet: command not found

- Install telnet using the steps above.

- $ telnet localhost 44444

- Connected to localhost.

- Escape character is '^]'.

- I am typing this line .. it should appear in the log.

- OK

- Yes!

- OK
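Each OK in the session is Flume's netcat source acknowledging one event (its ack-every-event setting is on by default). If telnet is not available, a small TCP client can play the same role. The sketch below is hypothetical: send_events sends each line and collects the replies, and start_fake_netcat_source is a stand-in server that only imitates the OK replies so the client can be tried without Flume. Host and port default to the localhost:44444 binding from the configuration above.

```python
import socket
import threading


def send_events(lines, host="localhost", port=44444, timeout=5.0):
    """Send each line as one event (newline-terminated) and collect the source's replies."""
    replies = []
    with socket.create_connection((host, port), timeout=timeout) as sock:
        reader = sock.makefile("rb")
        for line in lines:
            sock.sendall(line.encode("utf-8") + b"\n")
            replies.append(reader.readline().decode("utf-8").strip())
        reader.close()
    return replies


def start_fake_netcat_source(host="127.0.0.1"):
    """Hypothetical stand-in for the netcat source: reply OK to every line, record what arrived."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind((host, 0))  # port 0: let the OS pick a free port
    server.listen(1)
    received = []

    def serve():
        conn, _addr = server.accept()
        with conn, conn.makefile("rb") as reader:
            for raw in reader:  # ends when the client closes the connection
                received.append(raw.decode("utf-8").rstrip("\n"))
                conn.sendall(b"OK\n")
        server.close()

    thread = threading.Thread(target=serve, daemon=True)
    thread.start()
    return server.getsockname()[1], received, thread
```

Against a running agent, send_events(["happy", "12345"]) should return one "OK" per line. Unlike telnet, this client ends lines with a bare newline, so the event bodies would not carry a trailing carriage return (0D).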

The output appears in the window where Flume is running:

- 2016-01-15 00:19:41,485 (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.LoggerSink.process(LoggerSink.java:94)] Event: { headers:{} body: 49 20 61 6D 20 74 79 70 69 6E 67 20 74 68 69 73 I am typing this }

- 2016-01-15 00:20:05,351 (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.LoggerSink.process(LoggerSink.java:94)] Event: { headers:{} body: 59 65 73 21 20 0D Yes! . }
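The logger sink prints each event body as hex bytes followed by their printable characters, and it appears to log only the first 16 bytes of a body by default, which is why the first event stops at "I am typing this". The trailing 0D in the second event is the carriage return that telnet sends before its line feed. A small sketch for turning the hex part back into text; decode_logger_body is a hypothetical helper:

```python
def decode_logger_body(hex_dump):
    """Turn the hex bytes from a LoggerSink 'body:' field back into text."""
    return bytes.fromhex(hex_dump).decode("utf-8", errors="replace")


first = decode_logger_body("49 20 61 6D 20 74 79 70 69 6E 67 20 74 68 69 73")
second = decode_logger_body("59 65 73 21 20 0D")
print(repr(first), len(first))  # 'I am typing this' 16
print(repr(second))             # 'Yes! \r'
```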

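Test Flume using Hadoop/HDFS

Here is the configuration file used to test Hadoop/HDFS. Set the host and port in hdfs.path to your own NameNode address (the fs.defaultFS value in core-site.xml).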

$ cat ~/flume/conf/flume-conf.properties

    a1.sources = r1
    a1.sinks = k1
    a1.channels = c1

    # Describe/configure the source
    a1.sources.r1.type = netcat
    a1.sources.r1.bind = localhost
    a1.sources.r1.port = 44444

    # Describe the sink
    a1.sinks.k1.type = hdfs
    a1.sinks.k1.hdfs.path = hdfs://192.168.1.17:9000/flume/webdata
    a1.sinks.k1.hdfs.fileType = DataStream
    a1.sinks.k1.hdfs.writeFormat = Text

    # Use a channel which buffers events in memory
    a1.channels.c1.type = memory
    a1.channels.c1.capacity = 1000
    a1.channels.c1.transactionCapacity = 100

    # Bind the source and sink to the channel
    a1.sources.r1.channels = c1
    a1.sinks.k1.channel = c1
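Compared with the first configuration, only the sink lines change: the source and the memory channel are identical, and the logger sink is replaced by an hdfs sink with a path and file settings. hdfs.fileType = DataStream writes the raw event bodies instead of the default SequenceFile format. The sketch below renders just that sink section from a few parameters; hdfs_sink_properties is a hypothetical helper, and the NameNode URI you pass in is an assumption about your cluster.

```python
def hdfs_sink_properties(namenode_uri, path, agent="a1", sink="k1", channel="c1"):
    """Render the HDFS sink section of the example as properties lines."""
    settings = [
        ("type", "hdfs"),
        ("hdfs.path", namenode_uri.rstrip("/") + "/" + path.lstrip("/")),
        ("hdfs.fileType", "DataStream"),  # plain stream instead of the default SequenceFile
        ("hdfs.writeFormat", "Text"),
        ("channel", channel),             # sinks take exactly one channel
    ]
    return "\n".join(f"{agent}.sinks.{sink}.{key} = {value}" for key, value in settings)


print(hdfs_sink_properties("hdfs://192.168.1.17:9000", "/flume/webdata"))
```

Swapping these lines for the logger sink lines in the first file is all that is needed to move from console output to HDFS.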

The target directory does not exist in HDFS before running Flume:

- $ hdfs dfs -ls hdfs://192.168.1.14:8020/flume/webdata

- ls: `hdfs://192.168.1.14:8020/flume/webdata': No such file or directory

- $

Run Flume:

$ ~/flume/bin/flume-ng agent --conf ~/flume/conf --conf-file ~/flume/conf/flume-conf.properties --name a1 -Dflume.root.logger=INFO,console

Send input via telnet; Flume stores it in HDFS:

- $ telnet localhost 44444

- Connected to localhost.

- Escape character is '^]'.

- happy

- OK

- 12345

- OK

- end

- OK

- ^]

- telnet> quit

- Connection closed.

- $

Validate output in HDFS:

- $ hdfs dfs -ls hdfs://192.168.1.14:8020/flume/webdata

- -rw-r--r-- 1 hadoop supergroup 18 2016-01-15 17:10 hdfs://192.168.1.14:8020/flume/webdata/FlumeData.1452895815880

- $

- $ hdfs dfs -cat hdfs://192.168.1.14:8020/flume/webdata/FlumeData.1452895815880

- happy

- 12345

- end

- $
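The HDFS sink names its files with a prefix (hdfs.filePrefix, default FlumeData) followed by a number that appears to be a millisecond timestamp taken when the sink started the file; while a file is still open it also carries the in-use suffix (.tmp by default). A small sketch that reads the number back as a UTC time; flume_file_time is a hypothetical helper:

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def flume_file_time(filename):
    """Read the numeric suffix of an HDFS sink file name as milliseconds since the Unix epoch."""
    if filename.endswith(".tmp"):  # file still being written (default in-use suffix)
        filename = filename[: -len(".tmp")]
    _prefix, _dot, suffix = filename.rpartition(".")
    return EPOCH + timedelta(milliseconds=int(suffix))  # integer math, no float rounding


print(flume_file_time("FlumeData.1452895815880").isoformat())
# 2016-01-15T22:10:15.880000+00:00
```

22:10 UTC lines up with the 17:10 shown by hdfs dfs -ls if the cluster's clock is five hours behind UTC.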

Author: Pathik Paul